The Lane Marker Dataset

Automatically annotated lane markers using Lidar maps.


Quick specs:

- Over 100,000 annotated images

- Annotations covering distances of over 100 meters

- Resolution of 1276 x 717 pixels

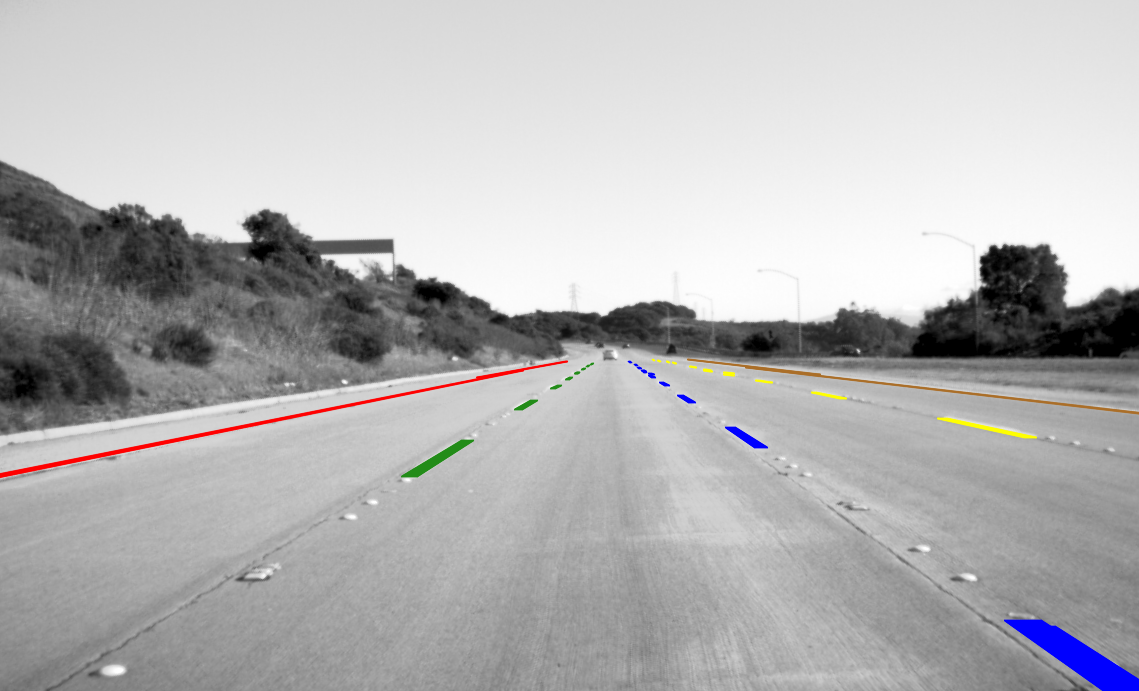

A Segmentation Challenge

Lane markers are tricky to annotate because their median width is only 12 cm. At greater distances, each marker covers only a few pixels and starts to blend with the asphalt in the camera image.
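
To give a rough sense of how quickly markers shrink in the image, the sketch below applies a simple pinhole camera model. The focal length of 1000 pixels is an assumption chosen for illustration, not a calibration value from the dataset. Only the 12 cm marker width comes from the text above.

```python
def marker_width_px(distance_m, focal_px=1000.0, marker_width_m=0.12):
    """Approximate image width in pixels of a marker viewed head-on.

    Pinhole model: width_px = focal_px * width_m / distance_m.
    focal_px is a hypothetical value, not the dataset's calibration.
    """
    if distance_m <= 0:
        raise ValueError("distance_m must be positive")
    return focal_px * marker_width_m / distance_m


for distance in (10, 25, 50, 100):
    print(f"{distance:>4} m -> {marker_width_px(distance):.1f} px")
```

Under this assumed focal length, a marker is about 12 pixels wide at 10 m and only about 1.2 pixels wide at 100 m.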

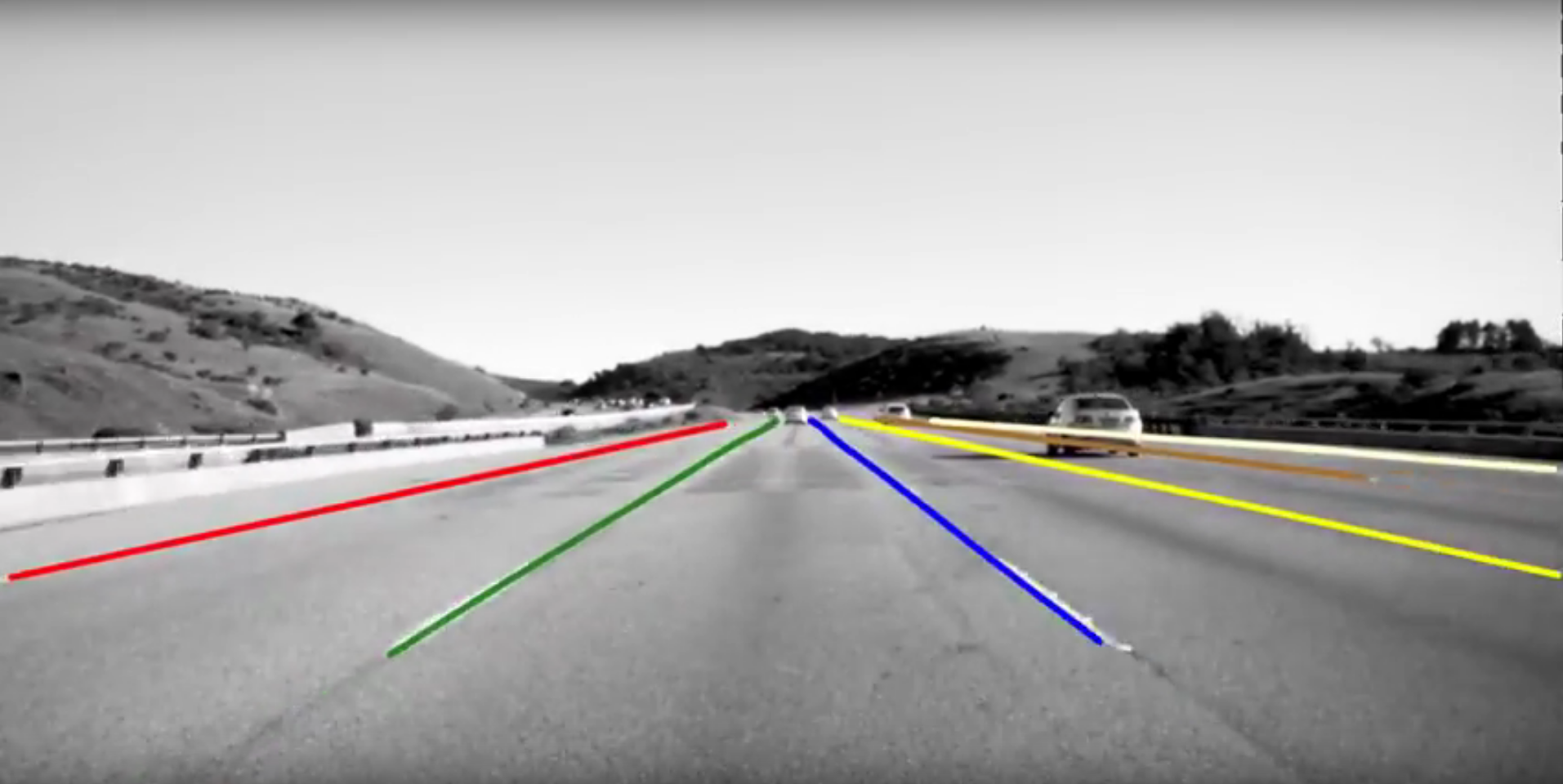

Lane Approximations

While pixel-level segmentation can be very useful for localization, some automated driving systems benefit from higher-level representations such as splines, clothoids, or polynomials. This section of the dataset allows for evaluating existing and novel techniques for such lane approximations.
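
As one illustration of a higher-level representation, the sketch below fits a second-degree polynomial to a handful of lane marker points with ordinary least squares. The sample points, the coordinate convention (longitudinal distance in meters mapped to lateral offset in meters), and the function names are assumptions made for this example. They do not reflect the dataset's label format or its evaluation scripts.

```python
def fit_polynomial(xs, ys, degree=2):
    """Least-squares polynomial fit; returns [c0, c1, ...], lowest order first."""
    n = degree + 1
    if len(xs) != len(ys) or len(xs) < n:
        raise ValueError("need at least degree + 1 matching samples")
    # Normal equations (A^T A) c = A^T y for the Vandermonde matrix A.
    ata = [[sum(x ** (i + j) for x in xs) for j in range(n)] for i in range(n)]
    aty = [sum(y * x ** i for x, y in zip(xs, ys)) for i in range(n)]
    # Gaussian elimination with partial pivoting on the augmented matrix.
    aug = [row + [rhs] for row, rhs in zip(ata, aty)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("singular system; samples may be degenerate")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, n):
            factor = aug[r][col] / aug[col][col]
            for c in range(col, n + 1):
                aug[r][c] -= factor * aug[col][c]
    # Back substitution.
    coeffs = [0.0] * n
    for i in reversed(range(n)):
        tail = sum(aug[i][j] * coeffs[j] for j in range(i + 1, n))
        coeffs[i] = (aug[i][n] - tail) / aug[i][i]
    return coeffs


def evaluate(coeffs, x):
    """Evaluate the polynomial at x."""
    return sum(c * x ** i for i, c in enumerate(coeffs))


# Hypothetical marker points: distance ahead (m) -> lateral offset (m).
distances = [0.0, 10.0, 20.0, 30.0, 40.0, 50.0]
offsets = [1.8, 2.0, 2.4, 3.0, 3.8, 4.8]
lane = fit_polynomial(distances, offsets, degree=2)
print([round(c, 4) for c in lane], round(evaluate(lane, 25.0), 3))
```

A low-degree polynomial is the simplest of the three representations named above. Splines and clothoids follow curvature changes more faithfully, at the cost of a more involved fit.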

Automated Annotations

The Unsupervised Llamas dataset was annotated by creating Lidar-based high-definition maps for automated driving that include lane markers. The automated vehicle can be localized against these maps, and the mapped lane markers are then projected into the camera frame. The 3D projection is optimized by minimizing the difference between markers already detected in the image and the projected ones. Further improvements can likely be achieved by using better detectors, optimizing the difference metrics, and adding some temporal consistency. Dataset samples are shown in the video below.
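
The project-then-refine idea can be sketched in a few lines. This is a toy illustration, not the authors' pipeline: it assumes a pinhole camera with hypothetical intrinsics, corrects only a single pitch angle through a grid search, and uses a mean nearest-neighbor pixel distance as the difference metric. All function names, parameters, and the synthetic "detections" are made up for this example.

```python
import math


def project(points_cam, fx, fy, cx, cy):
    """Pinhole projection of camera-frame points (x right, y down, z forward)."""
    return [(fx * x / z + cx, fy * y / z + cy) for x, y, z in points_cam if z > 0]


def rotate_pitch(points, pitch_rad):
    """Rotate points about the camera x-axis by pitch_rad."""
    c, s = math.cos(pitch_rad), math.sin(pitch_rad)
    return [(x, c * y - s * z, s * y + c * z) for x, y, z in points]


def mean_nearest_distance(projected, detected):
    """Average pixel distance from each projected point to its closest detection."""
    return sum(min(math.dist(p, d) for d in detected) for p in projected) / len(projected)


def refine_pitch(points_cam, detected, intrinsics, search_deg=1.0, steps=41):
    """Grid search for the pitch correction that best aligns projection and detections."""
    best = None
    for i in range(steps):
        pitch = math.radians(-search_deg + 2 * search_deg * i / (steps - 1))
        projected = project(rotate_pitch(points_cam, pitch), *intrinsics)
        if not projected:
            continue
        cost = mean_nearest_distance(projected, detected)
        if best is None or cost < best[1]:
            best = (pitch, cost)
    return best


# Hypothetical intrinsics; the principal point is the center of a 1276 x 717 image.
intrinsics = (1000.0, 1000.0, 638.0, 358.5)
# Hypothetical map points on the road, 1.5 m below the camera, 1.8 m to the right.
map_points = [(1.8, 1.5, z) for z in (10.0, 20.0, 30.0, 40.0, 50.0)]
# Synthetic detections: the same points seen with a 0.5 degree pitch error.
detections = project(rotate_pitch(map_points, math.radians(0.5)), *intrinsics)

best_pitch, best_cost = refine_pitch(map_points, detections, intrinsics)
print(f"pitch correction: {math.degrees(best_pitch):.2f} deg, cost: {best_cost:.3f} px")
```

A real system would optimize a full six-degree-of-freedom pose with a continuous optimizer and a more robust metric. The grid search over one angle only keeps the example short.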

Your Research

Try our benchmarks, or use the data to train your own segmentation or lane detection models or to create new metrics. We encourage suggestions for new benchmarks. Let us know if additional data (e.g. odometry information, steering wheel angle) would be useful, and feel free to extend the dataset's scripts on GitHub.

Citation

@inproceedings{llamas2019,

title={Unsupervised Labeled Lane Markers Using Maps},

author={Behrendt, Karsten and Soussan, Ryan},

booktitle={Proceedings of the IEEE International Conference on Computer Vision},

year={2019}

}